Jurgen Appello is, by his own description, "a Dutch guy" who is something of a humorist, an illustrator, and a proponent of Agile. He also writes a lot about complexity and its effects on systems and projects.

A recent posting, "The Normal Fallacy", takes on both misconceptions and lazy thinking, and reinforces the danger of thinking everything has a 'regression to the mean'.

Before addressing whether Appello's explanation of a fallacy is itself fallacious, it's worth a moment to review:

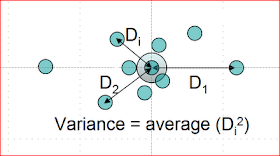

Complex systems differ from simple systems from the perspective of how we observe and measure system behaviour.

It's generally accepted that the behaviour of complex systems cannot be predicted with precision; they are not deterministic from the observer's perspective.

[I say observer's perspective, because internally, these systems work the way they are designed to work, with the exception of Complex Adaptive Systems, CAS, for which the design is self-adapting]

Thus, unlike simple systems that often have closed-form algorithmic descriptions, complex systems are usually evaluated with models of one kind or another, and we accept likely patterns of behaviour as the model outcome. ["Likely" meaning there's a probability of a particular pattern of behaviour]

Appello tells us not to have a knee-jerk reaction towards the bell-shaped Normal distribution. He's right on that one: it's not the end-all and be-all, but it serves as a surrogate for the probable patterns of complex systems.

In both humorous and serious discussion he tells us that the Pareto concept is too important to be ignored. The Pareto distribution, which gives rise to the 80/20 rule, and its close cousin, the Exponential distribution, are the mathematical underpinning for understanding many project events for which there's no average with symmetrical boundaries; in other words, no central tendency.
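To make the 80/20 idea concrete, here is a minimal simulation sketch, offered as an illustration rather than anything from Appello's posting. It assumes request sizes follow a Pareto distribution with shape parameter 1.16 (the value log 5 / log 4, roughly 1.16, is the one for which the top 20% theoretically carries 80% of the total). The function names, the sample size, and the seed are all hypothetical choices.

```python
import random


def top_share(sizes, top_fraction=0.2):
    """Fraction of the total size contributed by the largest items."""
    ordered = sorted(sizes, reverse=True)
    top_count = max(1, int(len(ordered) * top_fraction))
    return sum(ordered[:top_count]) / sum(ordered)


def simulate_request_sizes(n=100_000, alpha=1.16, seed=7):
    """Draw n hypothetical request sizes from a Pareto distribution.

    alpha is an assumed shape parameter; values near 1.16 correspond
    to the classic 80/20 split in theory.
    """
    rng = random.Random(seed)
    return [rng.paretovariate(alpha) for _ in range(n)]


if __name__ == "__main__":
    sizes = simulate_request_sizes()
    print(f"Share of total size in the top 20% of requests: {top_share(sizes):.2f}")
    print(f"Largest single request vs. sample mean: {max(sizes) / (sum(sizes) / len(sizes)):.0f}x")
```

With a tail this heavy, the share in any one finite sample wanders from run to run, which is itself the point: a handful of very large requests dominates the total, so an "average" request size says little about the next big one.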

His main example is a customer requirement. His assertion:

The assumption people make is that, when considering change requests or feature requests from customers, they can identify the “average” size of such requests, and calculate “standard” deviations to either side. It is an assumption (and mistake)... Customer demand is, by nature, an non-linear thing. If you assume that customer demand has an average, based on a limited sample of earlier events, you will inevitably be surprised that some future requests are outside of your expected range.

In an earlier posting, I went at this a different way, linking to a paper on the seven dangers in averages. Perhaps that's worth a re-read.

So far, so good. BUT.....

Work package picture

The Pareto histogram [commonly used for evaluating low-frequency, high-impact events in the context of many other small-impact events], the Exponential Distribution [commonly used for evaluating system device failure probabilities], and the Poisson Distribution, which Appello doesn't mention, [commonly used for evaluating arrival rates, like arrival rate of new requirements] are the team leader's or work package manager's view of the next one thing to happen.
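As a small worked example of the arrival-rate view, the sketch below evaluates the Poisson probability P(N = k) = e^(-rate) * rate^k / k! for new requirements arriving in one iteration. The average rate of 2 new requirements per iteration is a made-up figure for illustration, and the function names are hypothetical.

```python
import math


def poisson_pmf(k, rate):
    """Probability of exactly k arrivals when the average is `rate` per period."""
    return math.exp(-rate) * rate ** k / math.factorial(k)


def prob_at_least(k, rate):
    """Probability of k or more arrivals in one period."""
    return 1.0 - sum(poisson_pmf(i, rate) for i in range(k))


if __name__ == "__main__":
    rate = 2.0  # assumed average number of new requirements per iteration
    for k in range(6):
        print(f"P(exactly {k} new requirements) = {poisson_pmf(k, rate):.3f}")
    print(f"P(5 or more) = {prob_at_least(5, rate):.3f}")
```

Under that assumed rate, an iteration with five or more new requirements has roughly a 5% chance: uncommon, but something a team leader should expect to see from time to time.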

Bigger picture

But project managers are concerned with the collective effects of dozens, or hundreds of dozens, of work packages, and a longer time frame, even if practicing in an Agile environment. Regardless of the single-event distribution of the next thing down the road, the collective performance will tend towards a symmetrically distributed central value.

For example, I've copied a picture from a statistics text I have to show how fast the central tendency sets in. Here is just the sum of two events with Exponential distributions [see bottom left above for the single event]:
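The same tendency can be checked numerically. The sketch below is an illustration under stated assumptions, not a reproduction of the textbook figure: it assumes independent Exponential events with a rate of 1, a fixed seed, and hypothetical helper names, and it uses only Python's standard library. It measures skewness, which is 0 for a symmetric distribution; for the sum of k independent Exponential events the theoretical value is 2 / sqrt(k), so the lopsidedness fades as events are added.

```python
import random
import statistics


def sample_skewness(values):
    """Population skewness: the mean cubed z-score (0 for a symmetric sample)."""
    mean = statistics.fmean(values)
    sd = statistics.pstdev(values)
    return sum(((v - mean) / sd) ** 3 for v in values) / len(values)


def skewness_of_sum(k, trials=50_000, seed=1):
    """Skewness of the sum of k independent Exponential(rate=1) events."""
    rng = random.Random(seed)
    totals = [
        sum(rng.expovariate(1.0) for _ in range(k))
        for _ in range(trials)
    ]
    return sample_skewness(totals)


if __name__ == "__main__":
    for k in (1, 2, 10, 30):
        theory = 2 / k ** 0.5
        print(f"k={k:>2}: simulated skewness {skewness_of_sum(k):.2f}, theory {theory:.2f}")
```

This convergence relies on the single-event distribution having a finite variance, as the Exponential does; very heavy-tailed cases settle down far more slowly, if at all.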

For project managers, central tendency is a 'good enough' working model that simplifies a visualization of the project context.

The Normal curve is a common surrogate for the collective performance. Though a statistician will tell you it's rare that any practical project will have the conditions present for a truly Normal distribution, again:

It's good enough to assume a bell-shaped symmetric curve and press on.